Simple offline caching in Swift and Combine

Background

Following on from the previous post, where we explored simple JSON decoding, in this post we are going to extend that simple networking example to include some offline caching, so that if our network requests fail we can still provide content.

I hear of a lot of people being put off providing a caching layer for data in their apps. Popular solutions include putting an actual relational database inside your app to cache the data, such as Core Data or Realm. Now these solutions are fine if you are intending to leverage the power of a relational database to perform some kind of task. However, they add a lot more complexity if you are simply using them as a caching layer. A few drawbacks are listed below:

- If you are consuming an in-house API, you may be trying to replicate a back-end database structure driven by a much more capable server-side DBMS.

- Whether you are mapping against a back-end database or just the returned JSON, what happens when the structure changes? Do you need to update your parsing and data structure? Do you need to migrate data?

- Not only do you need to make sure you can parse and store the data correctly, you then must make sure you query the data in the same way so that you get the same results as returned from the API.

- What happens if multiple requests need to update the data? Handling concurrent data updates carries its own complexity and headaches.

This is just a sample of the challenges faced when trying to use a relational database as a caching layer for your app. Now you could build some custom caching layer that writes things to disk, or use a library such as PINCache. However, what if I told you there is something simpler that is already built in to iOS as standard?

Caching Headers

To help explain this we need to explore how HTTP caching headers are intended to work. Now, most requests to an API will return a bunch of HTTP headers. These provide information and advice to the receiver about the response to the request. We won’t cover them all, but the one we are most interested in for this example is the Cache-Control header.

Cache-control is an HTTP header used to specify browser caching policies in both client requests and server responses. Policies include how a resource is cached, where it’s cached and its maximum age before expiring (i.e., time to live)

The part of this statement that talks about maximum age before expiring is what we will explore here. Most APIs will specify something called a max-age in the response headers. This is the length of time in seconds that the receiver should consider this information valid for. After that period of time the response should be considered stale and new data be fetched from the source.
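As a rough illustration of what a receiver does with this header, here is a minimal sketch that pulls the max-age value out of a Cache-Control string. The helper name `maxAge(fromCacheControl:)` is hypothetical and not part of Foundation, and the parsing is deliberately simplified (it ignores quoted values and the other directives).

```swift
import Foundation

/// Hypothetical helper: extracts the max-age value (in seconds) from a
/// Cache-Control header value such as "public, max-age=30".
func maxAge(fromCacheControl headerValue: String) -> Int? {
    // Directives are comma separated and their names are case-insensitive.
    for directive in headerValue.split(separator: ",") {
        let parts = directive
            .trimmingCharacters(in: .whitespaces)
            .split(separator: "=", maxSplits: 1)
        guard parts.count == 2, parts[0].lowercased() == "max-age" else { continue }
        return Int(parts[1])
    }
    // No max-age directive was present.
    return nil
}

let seconds = maxAge(fromCacheControl: "max-age=30")
```

URLSession does this work for us, so a helper like this is only useful if you want to inspect or log the value yourself.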

By default URLSession and URLRequest have a cache policy of useProtocolCachePolicy. This means they will honour the HTTP caching headers when making requests. In the case of the max-age header described above, it will cache responses for the time specified in the header. It is possible to override this behaviour if you wish using one of the other cache policy options.
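To make the policy options a little more concrete, the sketch below creates three requests with different cache policies. The variable names are illustrative only, and the URL is the Postman Echo endpoint used later in this post.

```swift
import Foundation

let url = URL(string: "https://postman-echo.com/response-headers?Cache-Control=max-age=30")!

// Default: follow the protocol's caching rules (here, the HTTP headers).
let defaultRequest = URLRequest(url: url)

// Always go to the network, ignoring anything stored locally.
let freshRequest = URLRequest(url: url, cachePolicy: .reloadIgnoringLocalCacheData)

// Prefer cached data regardless of its age; load only if nothing is cached.
var cacheFirstRequest = URLRequest(url: url)
cacheFirstRequest.cachePolicy = .returnCacheDataElseLoad
```

The rest of this post sticks with the default policy, since that is the one that respects the server's headers.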

Postman

To demonstrate this behaviour in action we are going to use a tool called Postman. You may be using this already; it’s a fantastic tool for developing and testing APIs. One of the services provided by Postman is something called Postman Echo. This is a service that allows you to send various URL parameters to it and have Postman echo those items back to you in a certain response format. To test our example we are going to use the response headers service, which allows us to specify headers and values in the URL query string and have them played back to us in the actual response headers.

If we hit the URL below, we will get a response containing the headers that we specified in the URL query params.

https://postman-echo.com/response-headers?Cache-Control=max-age=30

We get back a header in the response of Cache-Control: max-age=30. This means that anyone processing the response should cache the response for 30 seconds at the most before requesting fresh data, as discussed previously.

We can use this to prove how the caching works in URLSession.

Caching in URLSession

Let’s set up an example below to test out these cache headers:

import Foundation

// 1
let url = URL(string: "https://postman-echo.com/response-headers?Content-Type=text/html&Cache-Control=max-age=30")!
let request = URLRequest(url: url)

let task = URLSession.shared.dataTask(with: url) { (data, response, error) in
    // 2
    if let httpResponse = response as? HTTPURLResponse,
       let date = httpResponse.value(forHTTPHeaderField: "Date"),
       let cacheControl = httpResponse.value(forHTTPHeaderField: "Cache-Control") {
        // 3
        print("Request1 date: \(date)")
        print("Request1 Cache Header: \(cacheControl)")
    }
}
task.resume()

// 4
sleep(5)

// 5
let task2 = URLSession.shared.dataTask(with: url) { (data, response, error) in
    if let httpResponse = response as? HTTPURLResponse,
       let date = httpResponse.value(forHTTPHeaderField: "Date"),
       let cacheControl = httpResponse.value(forHTTPHeaderField: "Cache-Control") {
        print("Request2 date: \(date)")
        print("Request2 Cache Header: \(cacheControl)")
    }
}
task2.resume()

Let’s step through this example to demonstrate what is happening:

- First of all we set up our Postman Echo request. We will set this up to return a cache header with a max-age of 30 seconds.

- We make a request using the standard dataTask method. When we get the response we cast it to an HTTPURLResponse. An HTTPURLResponse contains a dictionary called allHeaderFields, which holds all of the headers returned in the response. However, looking headers up in it directly is error prone as dictionary keys are case sensitive. To combat this Apple have added a function called value(forHTTPHeaderField:) that takes a key string and does a case-insensitive match against the header names so you don’t have to.

- With the code in point 2 we are grabbing the date of the response and the cache control header and printing them to the console so we can see what they are.

- We sleep for 5 seconds then perform another request.

- Here we are performing the same request as above and fetching the values of the response headers again. This will help us to see how the caching works.
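The case-insensitive lookup from step 2 can be tried without any networking. The sketch below builds an HTTPURLResponse by hand (the header value is illustrative) and reads the header back using a key with different casing.

```swift
import Foundation

let exampleURL = URL(string: "https://postman-echo.com/response-headers")!

// Build a response by hand; the header value is illustrative.
let response = HTTPURLResponse(url: exampleURL,
                               statusCode: 200,
                               httpVersion: "HTTP/1.1",
                               headerFields: ["Cache-Control": "max-age=30"])!

// The lookup key deliberately uses different casing from the stored header name.
let cacheControl = response.value(forHTTPHeaderField: "cache-control")
```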

If we run the two-request code above in our playground, we should see the following in the console:

Request1 date: Tue, 23 Jun 2020 09:21:36 GMT

Request1 Cache Header: max-age=30

Request2 date: Tue, 23 Jun 2020 09:21:36 GMT

Request2 Cache Header: max-age=30

So what does this tell us about our requests?

- The first 2 lines show the date and time at which our first request was answered, along with the cache header we configured in our Postman Echo request.

- The last 2 lines show exactly the same thing, including the same date.

This is because we set a cache time of 30 seconds in the response header. As you know from step 4 above, we slept for 5 seconds in between the requests. The fact the date headers are the same shows that the second response is in fact the same response as the first; it has just been fetched from the cache.

To prove this we can modify the request so that we only cache the response for 3 seconds, this way when we sleep for 5 seconds, the response from the first request should be considered stale and the second request should end up with a new response.

Let’s modify the URL in our request to set the cache control to 3:

https://postman-echo.com/response-headers?Cache-Control=max-age=3

Now if we run the example above the console messages should look something like this:

Request1 date: Tue, 23 Jun 2020 11:34:58 GMT

Request1 Cache Header: max-age=3

Request2 date: Tue, 23 Jun 2020 11:35:03 GMT

Request2 Cache Header: max-age=3

How is this different from above? The main difference you will notice is that the response dates are now different. The second response’s timestamp is 5 seconds after the first. This is because our cache time is 3 seconds now, so the second request is no longer served from the cache and is in fact a new request with a new response.
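The two outcomes can be reproduced with a little arithmetic. The sketch below is a simplified model of the freshness check, not what URLSession actually runs internally: the helper names `httpDate(from:)` and `isFresh(responseDate:maxAge:now:)` are hypothetical, and details such as the Age header are ignored.

```swift
import Foundation

/// Hypothetical helper: parses an HTTP Date header such as
/// "Tue, 23 Jun 2020 09:21:36 GMT".
func httpDate(from value: String) -> Date? {
    let formatter = DateFormatter()
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = TimeZone(secondsFromGMT: 0)
    formatter.dateFormat = "EEE, dd MMM yyyy HH:mm:ss zzz"
    return formatter.date(from: value)
}

/// Simplified freshness check: fresh while the response's age is below max-age.
func isFresh(responseDate: Date, maxAge: TimeInterval, now: Date) -> Bool {
    return now.timeIntervalSince(responseDate) < maxAge
}

let firstResponse = httpDate(from: "Tue, 23 Jun 2020 09:21:36 GMT")!
let secondRequestTime = firstResponse.addingTimeInterval(5) // after sleep(5)

// With max-age=30 the cached response is still usable 5 seconds later.
let freshWith30 = isFresh(responseDate: firstResponse, maxAge: 30, now: secondRequestTime)
// With max-age=3 it has gone stale, so a new request is needed.
let freshWith3 = isFresh(responseDate: firstResponse, maxAge: 3, now: secondRequestTime)
```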

Offline and the Network Conditioner

Now you are probably asking yourself what this has to do with offline caching? To understand how we can leverage this caching behaviour we need to throttle our requests so that they fail. One of the tools at our disposal is something called the Network Conditioner. This is provided by Apple in the additional tools download.

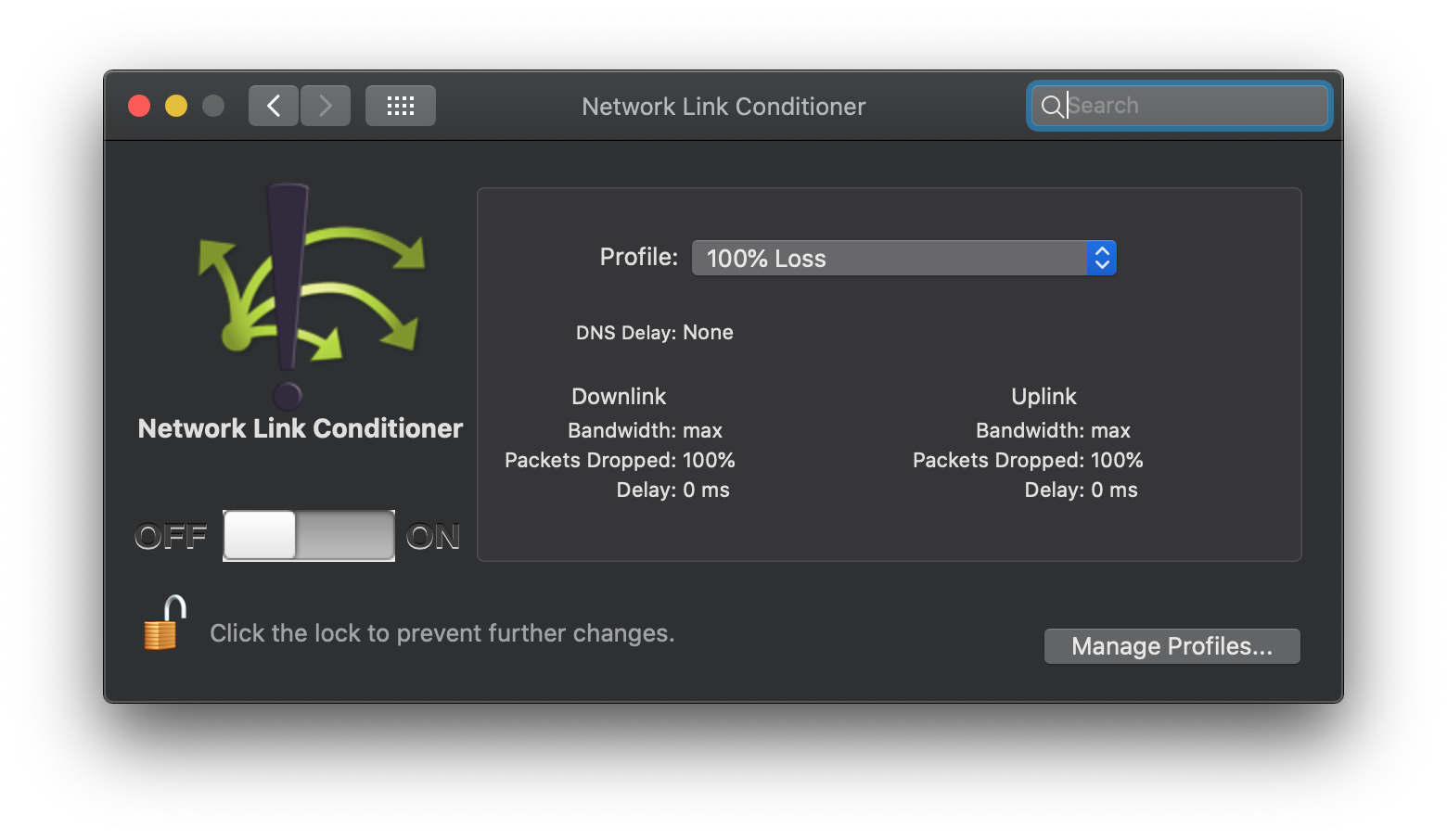

If you download the tools and install the network conditioner preference pane, you should be able to launch it from your Mac preferences. Once open you should see something like the below:

This tool allows you to create various network conditions on your Mac, such as 100% packet loss, 3G, dial-up etc. We are going to use this to replicate a connection failure in our example to see how we can begin to use some of URLSession’s properties to access cached request data.

Let’s add the below into our second request callback so we can see whether the request errors:

if let error = error {
    print(error.localizedDescription)
}

Let’s run the sample again. This time, once we receive the first 2 console messages, we activate the Network Conditioner using 100% loss. This will cause the second request to fail (it may take a few seconds for the request to time out).

If done correctly we should see something like below in the console:

Request1 date: Tue, 23 Jun 2020 12:36:09 GMT

Request1 Cache Header: max-age=3

The request timed out.

Now we aren’t getting a response from the second request. Instead we are receiving an error. This is expected behaviour as the second request is indeed failing. What we can do in this scenario is grab the response from the cache if we so wish. To do so add the code below to the second completion handler:

if let cachedResponse = URLSession.shared.configuration.urlCache?.cachedResponse(for: request),
   let httpResponse = cachedResponse.response as? HTTPURLResponse,
   let date = httpResponse.value(forHTTPHeaderField: "Date") {
    print("cached: \(date)")
}

Part of URLSession is URLSessionConfiguration. This is an object which provides all of the configuration for the URLSession. One of the attributes here is the URLCache. This is where the magic happens. It is an in-memory and on-disk cache of the responses to URLRequests. It is responsible for storing and deleting the data; in our example that is controlled via the response headers.

One of the methods on URLCache is cachedResponse(for:). This will return the cached response for a given URL request, provided one is still in the cache.

In the example above we are pulling the cached response and outputting the header of the attached HTTPURLResponse. If we take another look at our example with the additional 2 snippets above we should have something like below:

import Foundation

let url = URL(string: "https://postman-echo.com/response-headers?Content-Type=text/html&Cache-Control=max-age=3")!
let request = URLRequest(url: url)

let task = URLSession.shared.dataTask(with: url) { (data, response, error) in
    if let httpResponse = response as? HTTPURLResponse,
       let date = httpResponse.value(forHTTPHeaderField: "Date"),
       let cacheControl = httpResponse.value(forHTTPHeaderField: "Cache-Control") {
        print("Request1 date: \(date)")
        print("Request1 Cache Header: \(cacheControl)")
    }
}
task.resume()

sleep(5)

let task2 = URLSession.shared.dataTask(with: url) { (data, response, error) in
    if let httpResponse = response as? HTTPURLResponse,
       let date = httpResponse.value(forHTTPHeaderField: "Date"),
       let cacheControl = httpResponse.value(forHTTPHeaderField: "Cache-Control") {
        print("Request2 date: \(date)")
        print("Request2 Cache Header: \(cacheControl)")
    }

    if let error = error {
        print(error.localizedDescription)

        // The request failed, so fall back to whatever is still in the cache.
        if let cachedResponse = URLSession.shared.configuration.urlCache?.cachedResponse(for: request),
           let httpResponse = cachedResponse.response as? HTTPURLResponse,
           let date = httpResponse.value(forHTTPHeaderField: "Date") {
            print("cached: \(date)")
        }
    }
}
task2.resume()

Now if we follow the same test as before:

- Run the playground

- Wait for first request to finish

- Activate network conditioner with 100% packet loss

What we should see in the console is this:

Request1 date: Sun, 28 Jun 2020 07:03:48 GMT

Request1 Cache Header: max-age=3

The request timed out.

cached: Sun, 28 Jun 2020 07:03:48 GMT

So what is happening here?

- The first request is completing successfully so we can see the date and cache header info. The same as before.

- The second request is failing, hence the request timeout error.

- However this time, as the request has failed we are fetching the previously made request response from the cache and outputting the header from that.
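The examples so far rely on the shared session and its default cache. As a sketch of how the URLCache on a URLSessionConfiguration could be set explicitly, the snippet below gives a session its own cache. The capacities are illustrative assumptions rather than recommendations, and the `directory:` initialiser assumes iOS 13 / macOS 10.15 or later.

```swift
import Foundation

// Capacities are illustrative assumptions, not recommendations.
let cache = URLCache(memoryCapacity: 10 * 1024 * 1024,  // 10 MB in memory
                     diskCapacity: 50 * 1024 * 1024,    // 50 MB on disk
                     directory: nil)                    // nil uses the default location

let configuration = URLSessionConfiguration.default
configuration.urlCache = cache
// Keep honouring the HTTP caching headers, as in the rest of this post.
configuration.requestCachePolicy = .useProtocolCachePolicy

let session = URLSession(configuration: configuration)
```

Responses fetched through `session` would then be looked up via `session.configuration.urlCache` rather than the shared session's cache.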

Now that we have shown how to grab cached responses from the cache, let’s wrap this up in a nice way so we can reuse it if we wish.

Wrapping it up

First of all let’s create a standard Swift example, then we will have a look at how we can do this in Combine and some of the challenges in doing so.

import Foundation

extension URLSession {
    // 1
    func dataTask(with url: URL,
                  cachedResponseOnError: Bool,
                  completionHandler: @escaping (Data?, URLResponse?, Error?) -> Void) -> URLSessionDataTask {
        return self.dataTask(with: url) { (data, response, error) in
            // 2
            if cachedResponseOnError,
               let error = error,
               let cachedResponse = self.configuration.urlCache?.cachedResponse(for: URLRequest(url: url)) {
                completionHandler(cachedResponse.data, cachedResponse.response, error)
                return
            }

            // 3
            completionHandler(data, response, error)
        }
    }
}

So let’s walk through what we are doing here:

- We have created a method the same as the standard dataTask method on URLSession. However we have added a bool to control whether we would like to return the cached response on receiving a network error.

- Here we take the snippet we used earlier and apply it to this method. First we check whether we should return the cached response based on our cachedResponseOnError parameter. Then we check to see if we have an error. If we do, we attempt to grab the cached response from the URLCache and return its data and response objects along with the error.

- In the case where any of the above fails, we simply return everything exactly as it was returned by the normal dataTask method.

As the completion handler returns Data, URLResponse and Error, we are able to return the data and response even if there is an error. That is a bit of a disadvantage in this case, as the function caller needs to be aware that they may receive an error and the cached response at the same time, and so needs to cater for that scenario themselves.
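One way a caller might cater for that scenario is to fold the optional values into a single outcome before acting on them. This is only a sketch: the `LoadOutcome` enum and the `outcome(data:error:)` function are hypothetical names introduced for illustration, not part of the extension above.

```swift
import Foundation

/// Hypothetical result type for callers of the cachedResponseOnError variant.
enum LoadOutcome {
    case fresh(Data)         // the network request succeeded
    case stale(Data, Error)  // the request failed but cached data was returned
    case failed(Error)       // the request failed and nothing was cached
    case empty               // neither data nor an error was provided
}

/// Maps the data and error from the completion handler onto a single outcome.
func outcome(data: Data?, error: Error?) -> LoadOutcome {
    switch (data, error) {
    case let (data?, nil):
        return .fresh(data)
    case let (data?, error?):
        return .stale(data, error)
    case let (nil, error?):
        return .failed(error)
    case (nil, nil):
        return .empty
    }
}
```

Inside the completion handler the caller could then switch over `outcome(data: data, error: error)` and, for example, show a "you are viewing offline data" banner in the `.stale` case.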

Combine

Hopefully you have at least heard of Combine, even if you haven’t had the chance to use it yet in a production app. It is Apple’s own version of a reactive framework. Those of you who have already been using RxSwift will be right at home. We aren’t going to go into too much detail about what Combine is, but here is a definition of what reactive programming is:

In computing, reactive programming is a declarative programming paradigm concerned with data streams and the propagation of change

In simpler terms, reactive programming uses an observer pattern to allow classes to monitor different streams of data or state. When this state changes it emits an event with the new value, which can trigger other streams to perform work or update, such as UI code. If you are familiar with KVO you will understand the basic concept. However, reactive programming is far less painful and a lot more powerful than KVO.

Now the approach described in the previous section works fine in the case of the completionHandler, as it allows us to return all 3 items to the caller regardless of what happens. However, in Combine, streams are designed to either return a value OR an error.

First of all let’s look at a simple example:

import Combine
import Foundation

// 1
typealias ShortOutput = URLSession.DataTaskPublisher.Output

extension URLSession {
    // 2
    func dataTaskPublisher(for url: URL,
                           cachedResponseOnError: Bool) -> AnyPublisher<ShortOutput, Error> {
        return self.dataTaskPublisher(for: url)
            // 3
            .tryCatch { [weak self] (error) -> AnyPublisher<ShortOutput, Never> in
                // 4
                guard cachedResponseOnError,
                      let urlCache = self?.configuration.urlCache,
                      let cachedResponse = urlCache.cachedResponse(for: URLRequest(url: url))
                else {
                    throw error
                }

                // 5
                return Just(ShortOutput(
                    data: cachedResponse.data,
                    response: cachedResponse.response
                )).eraseToAnyPublisher()
            // 6
            }.eraseToAnyPublisher()
    }
}

So let’s step through what is happening here:

- First I am creating a typealias of the dataTaskPublisher.Output. This is mostly for code formatting reasons as the string is very long. This is simply a Data object and a URLResponse object in a tuple.

- Here we have setup our function with the cachedResponseOnError flag the same as before. We are returning a publisher with our type aliased output.

- First we call the standard dataTaskPublisher method to setup our request. We immediately chain that publisher using the new tryCatch. So what does this do? “Handles errors from an upstream publisher by either replacing it with another publisher or throwing a new error.” So here we catch any connection errors from the dataTaskPublisher and we can either throw another error or send another publisher down the chain.

- So the same as our pure Swift example we attempt to fetch our response from the cache, except here, if we fail to find anything in the cache, we just rethrow the same error we received in the tryCatch closure so it can be handled further down the stream.

- If we were able to find a response in the cache then we use the Just publisher to send the new value down the stream wrapping our cached response.

- We erase the types to AnyPublisher using type erasure so items further down the stream don’t need to know the types. For more info on type erasure see my previous post.
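The tryCatch behaviour walked through above can also be observed without a network connection. In the sketch below a Fail publisher stands in for a data task publisher whose request timed out, and a plain dictionary stands in for URLCache. Both stand-ins, the `load(_:)` function and the example.com keys are assumptions made purely for illustration.

```swift
import Combine
import Foundation

// Hypothetical in-memory stand-in for URLCache, keyed by URL string.
let fakeCache = ["https://example.com/items": "cached body"]

func load(_ key: String) -> AnyPublisher<String, Error> {
    // Fail stands in for a dataTaskPublisher whose request timed out.
    return Fail<String, URLError>(error: URLError(.timedOut))
        .tryCatch { error -> Just<String> in
            // Rethrow when nothing is cached, otherwise replace the error with a value.
            guard let cached = fakeCache[key] else { throw error }
            return Just(cached)
        }
        .eraseToAnyPublisher()
}

var received: [String] = []
var failures: [Error] = []

// Cache hit: the subscriber gets the cached value and never sees the error.
let hit = load("https://example.com/items").sink(
    receiveCompletion: { if case .failure(let error) = $0 { failures.append(error) } },
    receiveValue: { received.append($0) }
)

// Cache miss: the original error is rethrown and reaches the subscriber.
let miss = load("https://example.com/missing").sink(
    receiveCompletion: { if case .failure(let error) = $0 { failures.append(error) } },
    receiveValue: { received.append($0) }
)
```

Note that in the cache-hit case the subscriber has no way of telling that a failure happened at all.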

Now let’s take our previous test and adapt it so we can see this in action:

// 1
let url = URL(string: "https://postman-echo.com/response-headers?Content-Type=text/html&Cache-Control=max-age=3")!
let publisher = URLSession.shared.dataTaskPublisher(for: url, cachedResponseOnError: true)

// 2
let token = publisher
    .sink(receiveCompletion: { (completion) in
        switch completion {
        case .finished:
            break
        case .failure(let error):
            print(error.localizedDescription)
        }
    }) { (responseHandler: URLSession.DataTaskPublisher.Output) in
        if let httpResponse = responseHandler.response as? HTTPURLResponse,
           let date = httpResponse.value(forHTTPHeaderField: "Date"),
           let cacheControl = httpResponse.value(forHTTPHeaderField: "Cache-Control") {
            print("Request1 date: \(date)")
            print("Request1 Cache Header: \(cacheControl)")
        }
    }

// 3
sleep(5)

// 4
let token2 = publisher
    .sink(receiveCompletion: { (completion) in
        switch completion {
        case .finished:
            break
        case .failure(let error):
            print(error.localizedDescription)
        }
    }) { (responseHandler: URLSession.DataTaskPublisher.Output) in
        if let httpResponse = responseHandler.response as? HTTPURLResponse,
           let date = httpResponse.value(forHTTPHeaderField: "Date"),
           let cacheControl = httpResponse.value(forHTTPHeaderField: "Cache-Control") {
            print("Request2 date: \(date)")
            print("Request2 Cache Header: \(cacheControl)")
        }
    }

As we have done with our other examples, let’s step through and see what happens.

- First we create our request as we did in the pure Swift example, and then create our new publisher using our newly created function.

- Now in Combine, publishers only execute once there is a subscriber. This happens whenever the sink function is called. The first closure is called when either the stream completes or an error is thrown (which also terminates the stream). The second closure is called whenever a new value is published from the stream. In this first case we get a tuple containing Data and a URLResponse. As before we inspect the date header of the response.

- As before we sleep for 5 seconds (we have set a max-age of 3 seconds using the cache control header).

- This is the same as step 2, except that we changed the printed output to say Request2.
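The point about publishers only doing work once something subscribes can be seen in isolation with Deferred. This is a sketch with hypothetical names, in which a counter stands in for the network request: nothing runs when the publisher is created, and each call to sink runs the work again, which is why the two subscriptions in the walkthrough result in two separate requests.

```swift
import Combine

var workStarted = 0

// Deferred runs its closure once per subscriber, standing in here for a
// publisher that starts a request for each subscription.
let publisher = Deferred { () -> Just<Int> in
    workStarted += 1
    return Just(workStarted)
}

// Creating the publisher has not run the closure yet.
let countBeforeSubscribing = workStarted

var values: [Int] = []
let first = publisher.sink { values.append($0) }
let second = publisher.sink { values.append($0) }
```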

If we follow the same steps as before and turn on packet loss using the network conditioner when we hit step 3 we should see a console log like below:

Request1 date: Tue, 30 Jun 2020 07:53:26 GMT

Request1 Cache Header: max-age=3

Request2 date: Tue, 30 Jun 2020 07:53:26 GMT

Request2 Cache Header: max-age=3

Now, this proves that we are returning our cached response in the second request as we have no connection and we are still receiving a response. However what is the problem here?

There is no error

The above implementation works fine if we just want to display cached data. However, you may wish to inform the user that there was a connection failure and they are viewing old / stale information. So how can we get around this? To send the cached value followed by the error, one option is to create a custom Combine publisher. We won’t cover that here as that is a post in itself.
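As a possible lighter-weight alternative to a custom publisher, Combine’s built-in operators can be chained to emit a value and then fail. The sketch below is not the approach taken in this post, and the helper name `cachedThenError` is hypothetical. It uses Just, setFailureType(to:) and append with a Fail publisher.

```swift
import Combine
import Foundation

/// Hypothetical helper: emits the cached value first, then fails with the original error.
func cachedThenError<Value>(_ cached: Value, _ error: Error) -> AnyPublisher<Value, Error> {
    return Just(cached)
        .setFailureType(to: Error.self)
        .append(Fail<Value, Error>(error: error))
        .eraseToAnyPublisher()
}

var events: [String] = []
let token = cachedThenError("cached body", URLError(.timedOut)).sink(
    receiveCompletion: { completion in
        if case .failure = completion { events.append("failure") }
    },
    receiveValue: { events.append("value: \($0)") }
)
```

Under these assumptions, a publisher like this could be returned from the tryCatch closure in place of the plain Just, so that subscribers receive the cached response and are still told that the request failed.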

Conclusion

We have shown how we can make use of built in functionality of URLSession and URLCache along with the HTTP standard headers to provide simple and basic offline caching.

Advantages

- Simple implementation, doesn’t require 3rd party frameworks or complex relational databases

- Makes use of 1st party frameworks and relies on currently available standards (HTTP)

- Concurrency handled automatically

Disadvantages

- Relies on cache-control headers being correctly used in the API being consumed

- URLSession cache policies need to be configured correctly

- Doesn’t support more complex caching requirements / rules if needed

- The system can purge the contents of URLCache, for example when the device runs low on storage, which is something to bear in mind when using this approach

In summary, this approach is simple and provides basic offline functionality for your app. If you have more complex needs / requirements for caching your data then other approaches may be more suitable.

Feel free to download the playground and play around with the examples yourself.